As any cook knows, some liquids mix well with each other, but others do not.

For example, when a tablespoon of vinegar is poured into water, a brief stir suffices to thoroughly combine the two liquids. However, a tablespoon of oil poured into water will coalesce into droplets that no amount of stirring can dissolve. The physics that governs the mixing of liquids is not limited to mixing bowls; it also affects the behavior of molecules inside cells. It’s been known for several years that some proteins behave like liquids, and that some liquid-like proteins don’t mix together. However, very little is known about how these liquid-like proteins behave on cellular surfaces.

“The separation between two liquids that won’t mix, like oil and water, is known as ‘liquid-liquid phase separation,’ and it’s central to the function of many proteins,” said Sagar Setru, a 2021 Ph.D. graduate who worked with both Sabine Petry, a professor of molecular biology, and Joshua Shaevitz, a professor of physics and the Lewis-Sigler Institute for Integrative Genomics.

Such proteins do not dissolve inside the cell. Instead, they condense with themselves or with a limited number of other proteins, allowing cells to compartmentalize certain biochemical activities without having to wrap them inside membrane-bound spaces.

“In molecular biology, the study of proteins that form condensed phases with liquid-like properties is a rapidly growing field,” said Bernardo Gouveia, a graduate student in chemical and biological engineering, working with Howard Stone, the Donald R. Dixon ’69 and Elizabeth W. Dixon Professor of Mechanical and Aerospace Engineering, and chair of the department.

Setru and Gouveia collaborated as co-first authors on an effort to better understand one such protein.

“We were curious about the behavior of the liquid-like protein TPX2. What makes this protein special is that it does not form liquid droplets in the cytoplasm as had been observed before, but instead seems to undergo phase separation on biological polymers called microtubules,” said Setru. “TPX2 is necessary for making branched networks of microtubules, which is crucial for cell division. TPX2 is also overexpressed in some cancers, so understanding its behavior may have medical relevance.”

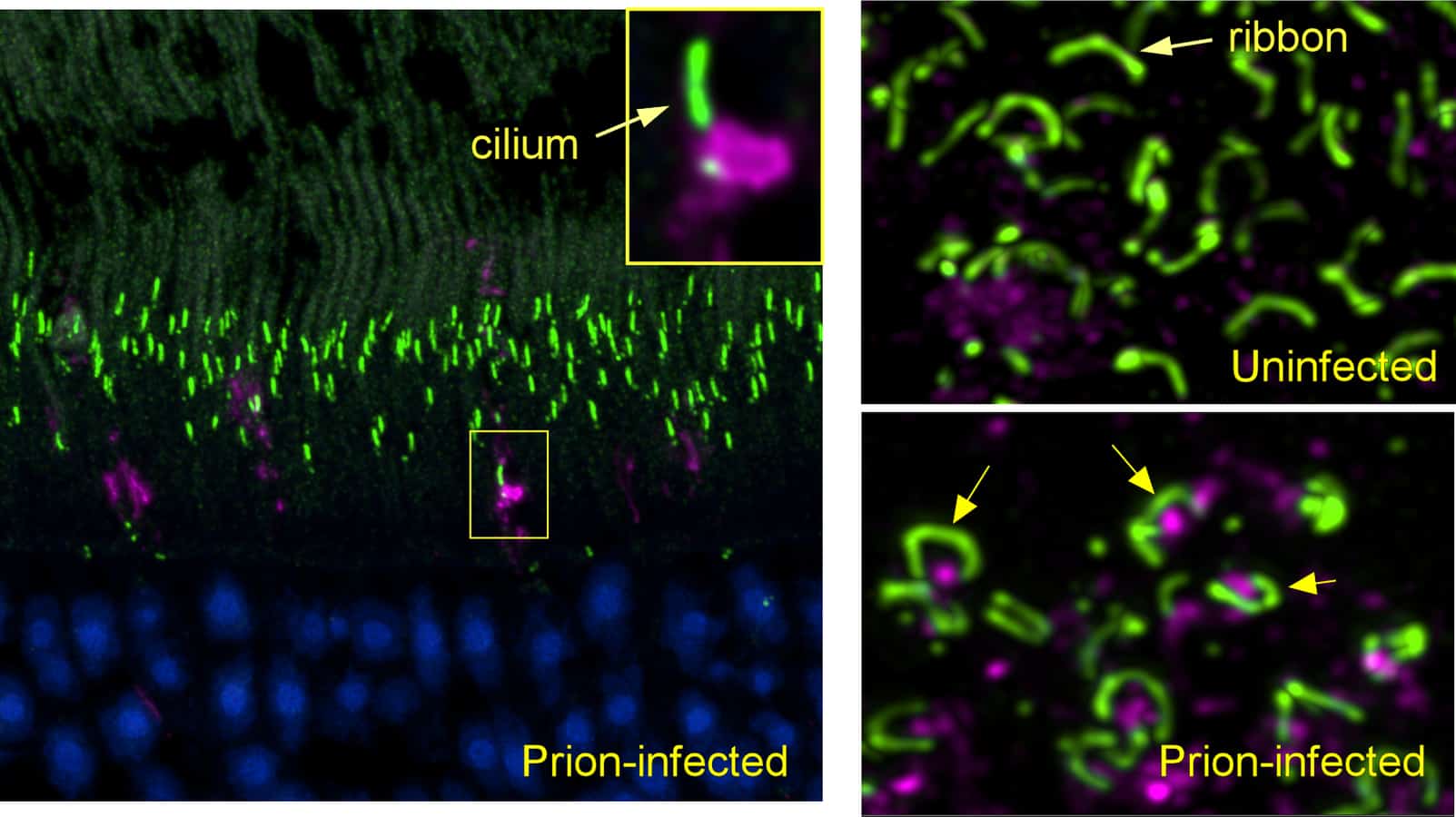

Individual microtubules are linear filaments that are rod-like in shape. During cell division, new microtubules form on the sides of existing ones to create a branched network. The sites where new microtubules will grow are marked by globules of condensed TPX2. These TPX2 globules recruit other proteins that are necessary to generate microtubule growth.

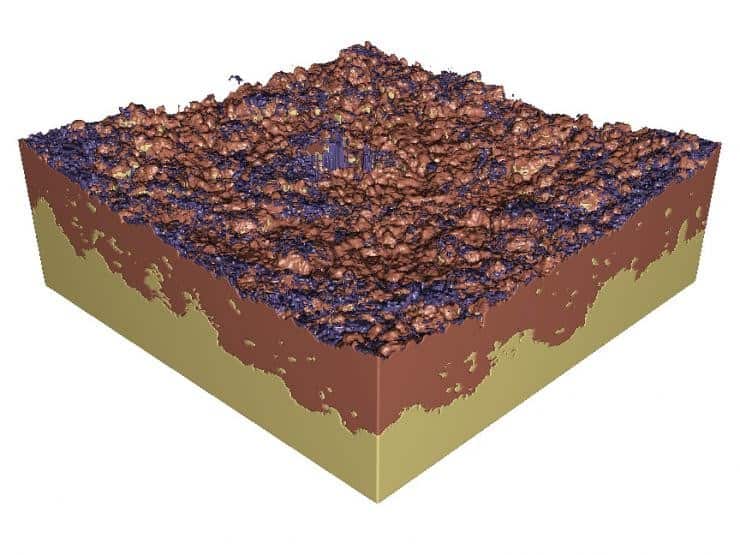

The researchers wanted to know how TPX2 globules form on a microtubule, so they set out to watch the process directly. First, they modified the microtubules and TPX2 so that each would glow with a different fluorescent color. Next, they placed the microtubules on a microscope slide, added TPX2, and then watched to see what would happen. They also made observations at very high spatial resolution using a powerful imaging approach called atomic force microscopy.

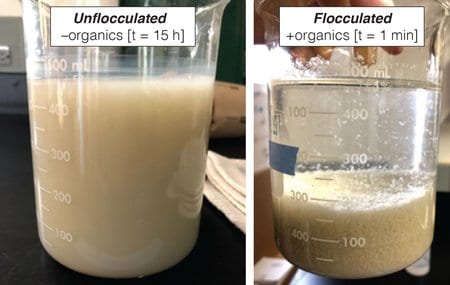

“We found that TPX2 first coats the entire microtubule and then breaks up into droplets that are evenly spaced apart, similar to how morning dew coats a spider web and breaks up into droplets,” said Gouveia.

Setru, Gouveia and colleagues found that this occurs because of something physicists call the Rayleigh-Plateau instability. Though non-physicists may not recognize the name, they will already be familiar with the phenomenon, which explains why a stream of water falling from a faucet breaks up into droplets, and why a uniform coating of water on a strand of spider web coalesces into separate beads.

“It is surprising to find such everyday physics in the nanoscale world of molecular biology,” said Gouveia.

Extending their study, the researchers found that the spacing and size of TPX2 globules on a microtubule are determined by the thickness of the initial TPX2 coating, that is, by how much TPX2 is present. This may explain why microtubule branching is altered in cancer cells that over-express TPX2.
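For readers curious how a coating's thickness can set droplet spacing, here is a minimal sketch of the standard textbook linear-stability result for a thin, viscous liquid film coating a rigid fiber. It is not the model used in the study. It assumes the TPX2 layer behaves like a simple (Newtonian) liquid film of uniform thickness h on a cylinder of radius a, with h much smaller than a; the function names, the microtubule radius of about 12.5 nm and the coating thicknesses are illustrative assumptions. In this approximation, ripples in the film grow only if their wavelength exceeds the circumference 2π(a + h) (the Plateau criterion), and the fastest-growing ripple, which sets the expected droplet spacing, has wavelength 2π√2 (a + h). If each droplet gathers the film from one such wavelength, both spacing and droplet volume increase with h.

```python
import math


def growth_rate(k, a, h, gamma=1.0, mu=1.0):
    """Linear growth rate of a sinusoidal ripple with wavenumber k on a thin
    liquid film of thickness h coating a fiber of radius a.
    Lubrication (thin-film) approximation, assumed valid for h << a;
    gamma = surface tension, mu = viscosity, arbitrary units."""
    R = a + h  # radius of the film's free surface
    x = k * R
    # Positive for 0 < kR < 1 (ripple grows), negative for kR > 1 (decays)
    return gamma * h**3 / (3.0 * mu * R**4) * (x**2 - x**4)


def is_unstable(wavelength, a, h):
    """Plateau criterion: ripples longer than the circumference 2*pi*R grow."""
    return wavelength > 2.0 * math.pi * (a + h)


def fastest_wavelength(a, h):
    """Wavelength that maximises growth_rate: (kR)^2 = 1/2, so
    lambda = 2*pi*sqrt(2)*(a + h). Taken as the expected droplet spacing."""
    return 2.0 * math.pi * math.sqrt(2.0) * (a + h)


def droplet_volume(a, h):
    """Film volume in one fastest-growing wavelength, i.e. the volume each
    droplet collects if the film breaks into one droplet per wavelength."""
    shell_area = math.pi * ((a + h) ** 2 - a ** 2)  # cross-section of the film
    return shell_area * fastest_wavelength(a, h)


if __name__ == "__main__":
    a = 12.5  # nm; assumed microtubule outer radius (~25 nm diameter)
    for h in (1.0, 2.0, 4.0):  # nm; hypothetical coating thicknesses
        print(f"h = {h:3.1f} nm -> spacing ~ {fastest_wavelength(a, h):6.1f} nm, "
              f"droplet volume ~ {droplet_volume(a, h):8.0f} nm^3")
```

Running it shows the predicted spacing and droplet volume both rising as the hypothetical coating gets thicker, which is the qualitative trend the researchers report; the actual numbers for TPX2 depend on properties this sketch does not model.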

“We used simulations to show that these droplets are a more efficient way to make branches than just having a uniform coating or binding of the protein all along the microtubule,” said Setru.

“That the physics of droplet formation, so vividly visible to the naked eye, has a role to play down at the micrometer scales, helps establish the growing interface (no pun intended) between soft matter physics and biology,” said Rohit Pappu, the Edwin H. Murty Professor of Engineering at Washington University in St. Louis, who was not involved in the study.

“The underlying theory is likely to be applicable to an assortment of interfaces between liquid-like condensates and cellular surfaces,” added Pappu. “I suspect we will be coming back to this work over and over again.”

“A hydrodynamic instability drives protein droplet formation on microtubules to nucleate branches,” by Sagar U. Setru, Bernardo Gouveia, Raymundo Alfaro-Aco, Joshua W. Shaevitz, Howard A. Stone and Sabine Petry, appeared in the Jan. 28 issue of Nature Physics (DOI: 10.1038/s41567-020-01141-8). This work was supported by the Paul and Daisy Soros Fellowships for New Americans; the National Science Foundation (Graduate Research Fellowship Program and via the Center for the Physics of Biological Function, PHY-1734030); the National Institutes of Health (National Cancer Institute National Research Service Award 1F31CA236160, National Human Genome Research Institute training grant 5T32HG003284, National Institute on Aging 1DP2GM123493); Pew Scholars Program (00027340); and the Packard Foundation (2014–40376).

from ScienceBlog.com https://ift.tt/36qWYxM