As the United States races to achieve its goal of zero-carbon electricity generation by 2035, energy providers are swiftly ramping up renewable resources such as solar and wind. But because these technologies churn out electrons only when the sun shines and the wind blows, they need backup from other energy sources, especially during seasons of high electric demand. Currently, plants burning fossil fuels, primarily natural gas, fill in the gaps.

“As we move to more and more renewable penetration, this intermittency will make a greater impact on the electric power system,” says Emre Gençer, a research scientist at the MIT Energy Initiative (MITEI). That’s because grid operators will increasingly resort to fossil-fuel-based “peaker” plants that compensate for the intermittency of the variable renewable energy (VRE) sources of sun and wind. “If we’re to achieve zero-carbon electricity, we must replace all greenhouse gas-emitting sources,” Gençer says.

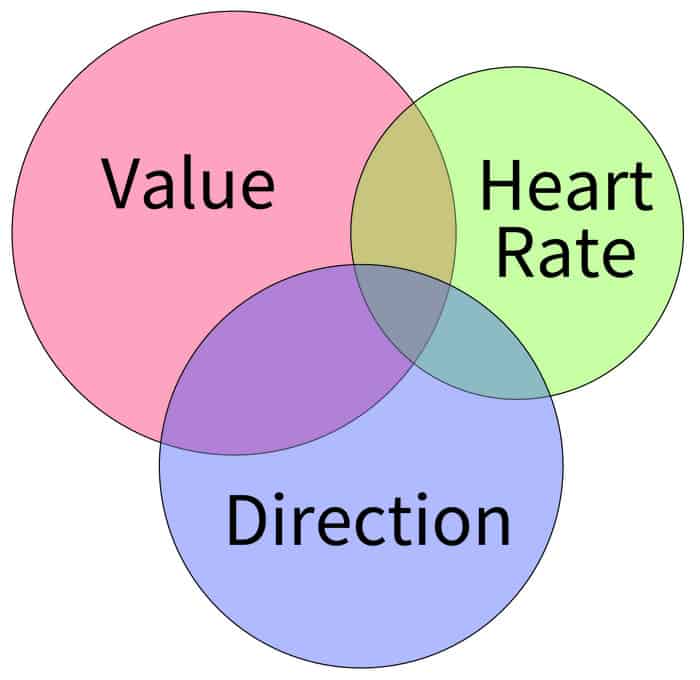

Low- and zero-carbon alternatives to greenhouse gas-emitting peaker plants are in development, such as arrays of lithium-ion batteries and hydrogen power generation. But each of these evolving technologies comes with its own set of advantages and constraints, and it has proven difficult to frame the debate about these options in a way that’s useful for policymakers, investors, and utilities engaged in the clean energy transition.

Now, Gençer and Drake D. Hernandez SM ’21 have come up with a model that makes it possible to pin down the pros and cons of these peaker-plant alternatives with greater precision. Their hybrid technological and economic analysis, based on a detailed inventory of California’s power system, was published online last month in Applied Energy. While their work focuses on the most cost-effective solutions for replacing peaker power plants, it also contains insights intended to contribute to the larger conversation about transforming energy systems.

“Our study’s essential takeaway is that hydrogen-fired power generation can be the more economical option when compared to lithium-ion batteries — even today, when the costs of hydrogen production, transmission, and storage are very high,” says Hernandez, who worked on the study while a graduate research assistant for MITEI. Adds Gençer, “If there is a place for hydrogen in the cases we analyzed, that suggests there is a promising role for hydrogen to play in the energy transition.”

Adding up the costs

California serves as a stellar paradigm for a swiftly shifting power system. The state draws more than 20 percent of its electricity from solar and approximately 7 percent from wind, with more VRE coming online rapidly. This means its peaker plants already play a pivotal role, coming online each evening when the sun goes down or when events such as heat waves drive up electricity use for days at a time.

“We looked at all the peaker plants in California,” recounts Gençer. “We wanted to know the cost of electricity if we replaced them with hydrogen-fired turbines or with lithium-ion batteries.” The researchers used a core metric called the levelized cost of electricity (LCOE) as a way of comparing the costs of different technologies to each other. LCOE measures the average total cost of building and operating a particular energy-generating asset per unit of total electricity generated over the hypothetical lifetime of that asset.
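For readers who want to see the metric as arithmetic, here is a minimal sketch of an LCOE calculation. It is not the researchers' model: the function name, its parameters, and the optional discounting of future costs and output are assumptions chosen for illustration, and any numbers passed in are hypothetical.

```python
def levelized_cost(capital_cost, annual_operating_cost, annual_energy_mwh,
                   lifetime_years, discount_rate=0.0):
    """Illustrative LCOE: lifetime cost divided by lifetime electricity.

    Assumption: capital is spent up front (year 0), while operating cost
    and energy output are constant each year and discounted at the same rate.
    With discount_rate=0 this is a plain average cost per MWh.
    """
    if annual_energy_mwh <= 0 or lifetime_years <= 0:
        raise ValueError("energy and lifetime must be positive")

    total_cost = float(capital_cost)
    total_energy = 0.0
    for year in range(1, lifetime_years + 1):
        factor = (1.0 + discount_rate) ** year
        total_cost += annual_operating_cost / factor
        total_energy += annual_energy_mwh / factor
    return total_cost / total_energy


# Hypothetical asset: $1,000 to build, $100 per year to run,
# 10 MWh per year, 10-year lifetime, no discounting.
cost_per_mwh = levelized_cost(1000, 100, 10, 10)
```

Because the result is a cost per unit of electricity, two very different assets, such as a battery array and a hydrogen-fired turbine, can be compared on the same scale once their costs and output are estimated.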

Selecting 2019 as their base study year, the team looked at the costs of running natural gas-fired peaker plants, which they defined as plants operating 15 percent of the year in response to gaps in intermittent renewable electricity. In addition, they determined the amount of carbon dioxide released by these plants and the expense of abating these emissions. Much of this information was publicly available.
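To put that 15 percent threshold in concrete terms, the short sketch below converts it into hours and energy. The 8,760-hour year is standard for a non-leap year such as 2019; the 100-megawatt plant size and the simplification that the plant runs at full output whenever it operates are assumptions made purely for illustration.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (non-leap year)


def operating_hours(fraction_of_year, hours_per_year=HOURS_PER_YEAR):
    """Hours per year a plant runs, given the fraction of the year it operates."""
    return fraction_of_year * hours_per_year


def annual_energy_mwh(capacity_mw, fraction_of_year):
    """Energy per year, assuming full output whenever the plant is running."""
    return capacity_mw * operating_hours(fraction_of_year)


# The article's peaker definition: operating 15 percent of the year.
peaker_hours = operating_hours(0.15)
# Hypothetical 100 MW plant, used only to show the scale involved.
peaker_energy = annual_energy_mwh(100, 0.15)
```

Under these assumptions, a plant at the 15 percent threshold runs for roughly 1,314 hours a year, so its construction cost is spread over far less electricity than a plant that runs around the clock.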

Coming up with prices for replacing peaker plants with massive arrays of lithium-ion batteries was also relatively straightforward: “There are no technical limitations to lithium-ion, so you can build as many as you want; but they are super expensive in terms of their footprint for energy storage and the mining required to manufacture them,” says Gençer.

But then came the hard part: nailing down the costs of hydrogen-fired electricity generation. “The most difficult thing is finding cost assumptions for new technologies,” says Hernandez. “You can’t do this through a literature review, so we had many conversations with equipment manufacturers and plant operators.”

The team considered two different forms of hydrogen fuel to replace natural gas, one produced through electrolyzer facilities that convert water and electricity into hydrogen, and another that reforms natural gas, yielding hydrogen and carbon waste that can be captured to reduce emissions. They also ran the numbers on retrofitting natural gas plants to burn hydrogen as opposed to building entirely new facilities. Their model includes identification of likely locations throughout the state and expenses involved in constructing these facilities.

The researchers spent months compiling a giant dataset before setting out on the task of analysis. The results from their modeling were clear: “Hydrogen can be a more cost-effective alternative to lithium-ion batteries for peaking operations on a power grid,” says Hernandez. In addition, notes Gençer, “While certain technologies worked better in particular locations, we found that on average, reforming hydrogen rather than electrolytic hydrogen turned out to be the cheapest option for replacing peaker plants.”

A tool for energy investors

When he began this project, Gençer admits he “wasn’t hopeful” about hydrogen replacing natural gas in peaker plants. “It was kind of shocking to see in our different scenarios that there was a place for hydrogen.” That’s because the overall price tag for converting a fossil fuel-based plant to one based on hydrogen is very high, and such conversions likely won’t take place until more sectors of the economy embrace hydrogen, whether as a fuel for transportation or for varied manufacturing and industrial purposes.

A nascent hydrogen production infrastructure does exist, mainly in the production of ammonia for fertilizer. But enormous investments will be necessary to expand this framework to meet grid-scale needs, driven by purposeful incentives. “With any of the climate solutions proposed today, we will need a carbon tax or carbon pricing; otherwise nobody will switch to new technologies,” says Gençer.

The researchers believe studies like theirs could help key energy stakeholders make better-informed decisions. To that end, they have integrated their analysis into SESAME, a life cycle and techno-economic assessment tool for a range of energy systems that was developed by MIT researchers. Users can leverage this sophisticated modeling environment to compare costs of energy storage and emissions from different technologies, for instance, or to determine whether it is cost-efficient to replace a natural gas-powered plant with one powered by hydrogen.

“As utilities, industry, and investors look to decarbonize and achieve zero-emissions targets, they have to weigh the costs of investing in low-carbon technologies today against the potential impacts of climate change moving forward,” says Hernandez, who is currently a senior associate in the energy practice at Charles River Associates. Hydrogen, he believes, will become increasingly cost-competitive as its production costs decline and markets expand.

A study group member of MITEI’s soon-to-be-published Future of Storage study, Gençer knows that hydrogen alone will not usher in a zero-carbon future. But, he says, “Our research shows we need to seriously consider hydrogen in the energy transition, start thinking about key areas where hydrogen should be used, and start making the massive investments necessary.”

Funding for this research was provided by MITEI’s Low-Carbon Energy Centers and Future of Storage study.
