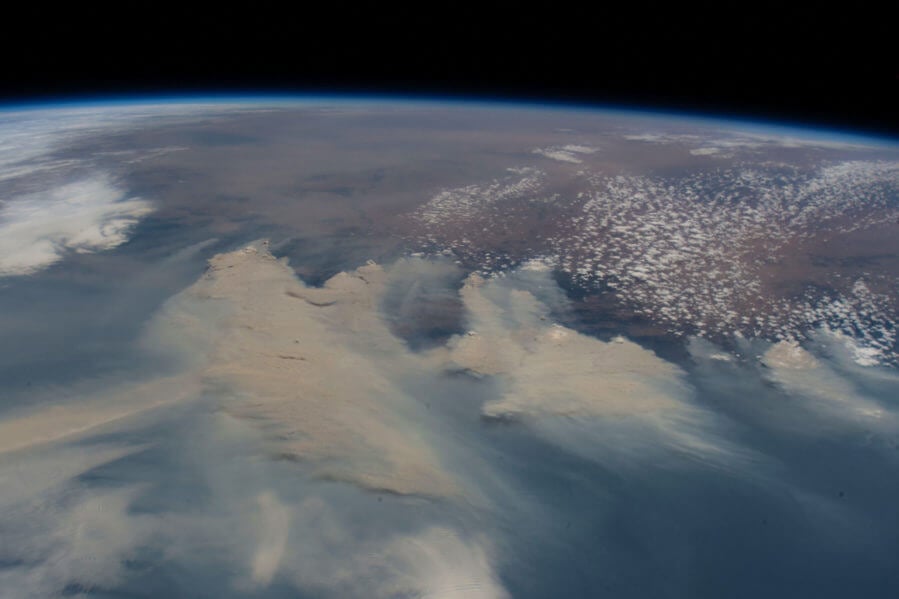

The Australian wildfires in 2019 and 2020 were historic for how far and fast they spread, and for how long and powerfully they burned. All told, the devastating “Black Summer” fires blazed across more than 43 million acres of land, and extinguished or displaced nearly 3 billion animals. The fires also injected over 1 million tons of smoke particles into the atmosphere, reaching up to 35 kilometers above Earth’s surface — a mass and reach comparable to that of an erupting volcano.

Now, atmospheric chemists at MIT have found that the smoke from those fires set off chemical reactions in the stratosphere that contributed to the destruction of ozone, which shields the Earth from incoming ultraviolet radiation. The team’s study, appearing this week in the Proceedings of the National Academy of Sciences, is the first to establish a chemical link between wildfire smoke and ozone depletion.

In March 2020, shortly after the fires subsided, the team observed a sharp drop in nitrogen dioxide in the stratosphere, which is the first step in a chemical cascade that is known to end in ozone depletion. The researchers found that this drop in nitrogen dioxide directly correlates with the amount of smoke that the fires released into the stratosphere. They estimate that this smoke-induced chemistry depleted the column of ozone by 1 percent.

To put this in context, they note that the phaseout of ozone-depleting gases under a worldwide agreement to stop their production has led to about a 1 percent ozone recovery from earlier ozone decreases over the past 10 years — meaning that the wildfires canceled those hard-won diplomatic gains for a short period. If future wildfires grow stronger and more frequent, as they are predicted to do with climate change, ozone’s projected recovery could be delayed by years.

“The Australian fires look like the biggest event so far, but as the world continues to warm, there is every reason to think these fires will become more frequent and more intense,” says lead author Susan Solomon, the Lee and Geraldine Martin Professor of Environmental Studies at MIT. “It’s another wakeup call, just as the Antarctic ozone hole was, in the sense of showing how bad things could actually be.”

The study’s co-authors include Kane Stone, a research scientist in MIT’s Department of Earth, Atmospheric, and Planetary Sciences, along with collaborators at multiple institutions including the University of Saskatchewan, Jinan University, the National Center for Atmospheric Research, and the University of Colorado at Boulder.

Chemical trace

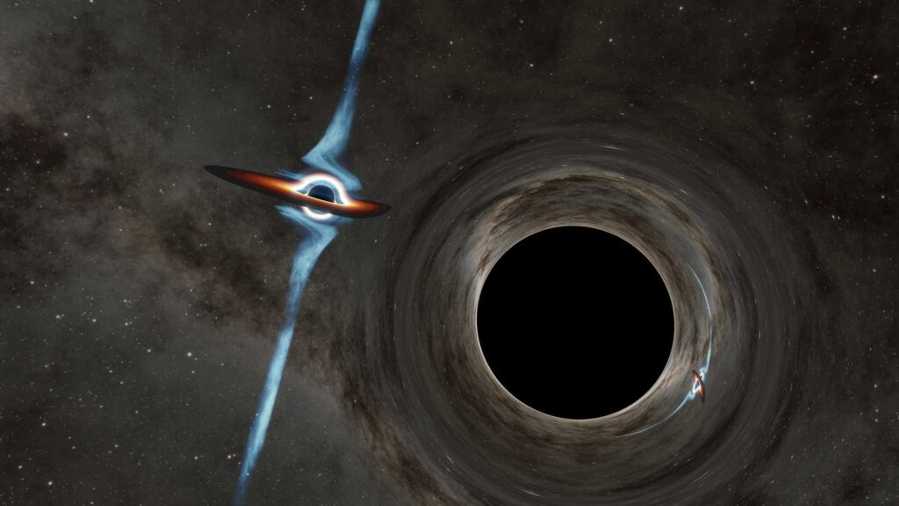

Massive wildfires are known to generate pyrocumulonimbus — towering clouds of smoke that can reach into the stratosphere, the layer of the atmosphere that lies between about 15 and 50 kilometers above the Earth’s surface. The smoke from Australia’s wildfires reached well into the stratosphere, as high as 35 kilometers.

In 2021, Solomon’s co-author, Pengfei Yu at Jinan University, carried out a separate study of the fires’ impacts and found that the accumulated smoke warmed parts of the stratosphere by as much as 2 degrees Celsius — a warming that persisted for six months. The study also found hints of ozone destruction in the Southern Hemisphere following the fires.

Solomon wondered whether smoke from the fires could have depleted ozone through chemistry similar to that of volcanic aerosols. Major volcanic eruptions can also reach into the stratosphere, and in 1989, Solomon discovered that the particles in these eruptions can destroy ozone through a series of chemical reactions. As the particles form in the atmosphere, they gather moisture on their surfaces. Once wet, the particles can react with circulating chemicals in the stratosphere, including dinitrogen pentoxide, which reacts with the particles to form nitric acid.

Normally, dinitrogen pentoxide reacts with sunlight to form various nitrogen species, including nitrogen dioxide, a compound that binds with chlorine-containing chemicals in the stratosphere. When volcanic smoke converts dinitrogen pentoxide into nitric acid, nitrogen dioxide drops, and the chlorine compounds take another path, morphing into chlorine monoxide, the main human-made agent that destroys ozone.
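For readers who want the chemistry spelled out, the cascade described above can be sketched with a few simplified textbook reactions. These are standard forms from stratospheric chemistry, not equations taken from the study itself. The symbol M (any third molecule that carries away excess energy) and the "wet particle" label are shorthand assumed here for illustration.

```latex
\begin{align*}
\mathrm{N_2O_5} + h\nu &\rightarrow \mathrm{NO_2} + \mathrm{NO_3}
  && \text{(sunlight releases nitrogen dioxide)} \\
\mathrm{N_2O_5} + \mathrm{H_2O}\,(\text{wet particle}) &\rightarrow 2\,\mathrm{HNO_3}
  && \text{(particle surfaces form nitric acid instead)} \\
\mathrm{ClO} + \mathrm{NO_2} + \mathrm{M} &\rightarrow \mathrm{ClONO_2} + \mathrm{M}
  && \text{(nitrogen dioxide ties up chlorine monoxide)} \\
\mathrm{Cl} + \mathrm{O_3} &\rightarrow \mathrm{ClO} + \mathrm{O_2}
  && \text{(active chlorine consumes ozone)}
\end{align*}
```

Read in order: when wet particles divert dinitrogen pentoxide into nitric acid (second line), less nitrogen dioxide is available for the third reaction, so more chlorine stays in its active, ozone-destroying form.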

“This chemistry, once you get past that point, is well-established,” Solomon says. “Once you have less nitrogen dioxide, you have to have more chlorine monoxide, and that will deplete ozone.”

Cloud injection

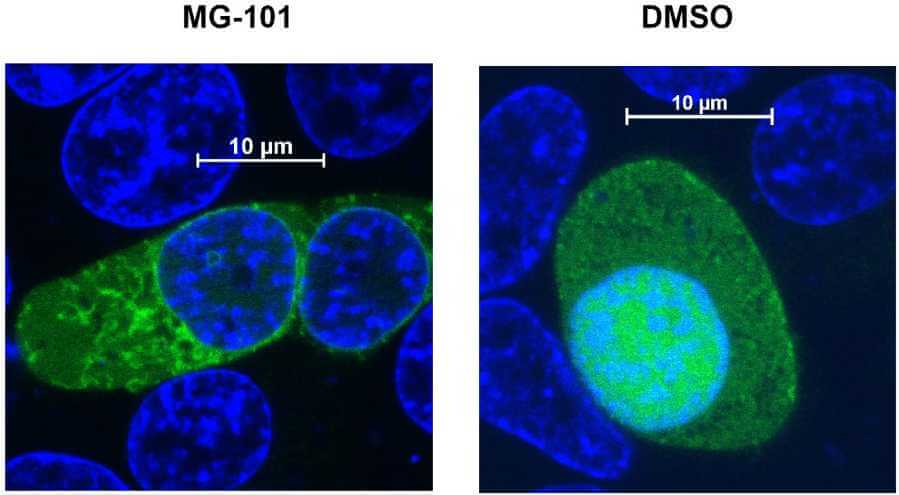

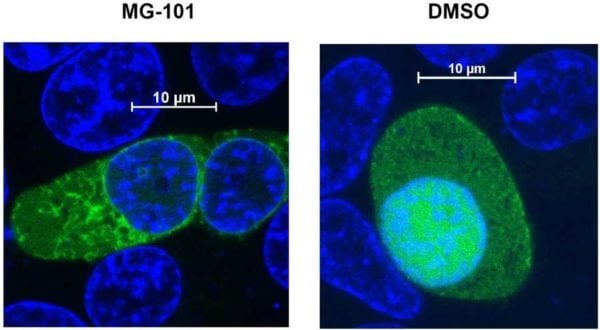

In the new study, Solomon and her colleagues looked at how concentrations of nitrogen dioxide in the stratosphere changed following the Australian fires. If these concentrations dropped significantly, it would signal that wildfire smoke depletes ozone through the same chemical reactions as some volcanic eruptions.

The team looked to observations of nitrogen dioxide taken by three independent satellites that have surveyed the Southern Hemisphere for varying lengths of time. They compared each satellite’s record in the months and years leading up to and following the Australian fires. All three records showed a significant drop in nitrogen dioxide in March 2020. For one satellite’s record, the drop represented a record low among observations spanning the last 20 years.

To check that the nitrogen dioxide decrease was a direct chemical effect of the fires’ smoke, the researchers carried out atmospheric simulations using a global, three-dimensional model that simulates hundreds of chemical reactions in the atmosphere, from the surface on up through the stratosphere.

The team injected a cloud of smoke particles into the model, simulating what was observed from the Australian wildfires. They assumed that the particles, like volcanic aerosols, gathered moisture. They then ran the model multiple times and compared the results to simulations without the smoke cloud.

In every simulation incorporating wildfire smoke, the team found that as the amount of smoke particles increased in the stratosphere, concentrations of nitrogen dioxide decreased, matching the observations of the three satellites.

“The behavior we saw, of more and more aerosols, and less and less nitrogen dioxide, in both the model and the data, is a fantastic fingerprint,” Solomon says. “It’s the first time that science has established a chemical mechanism linking wildfire smoke to ozone depletion. It may only be one chemical mechanism among several, but it’s clearly there. It tells us these particles are wet and they had to have caused some ozone depletion.”

She and her collaborators are looking into other reactions triggered by wildfire smoke that might further contribute to stripping ozone. For the time being, the major driver of ozone depletion remains chlorofluorocarbons, or CFCs — chemicals such as old refrigerants that have been banned under the Montreal Protocol, though they continue to linger in the stratosphere. But as global warming leads to stronger, more frequent wildfires, their smoke could have a serious, lasting impact on ozone.

“Wildfire smoke is a toxic brew of organic compounds that are complex beasts,” Solomon says. “And I’m afraid ozone is getting pummeled by a whole series of reactions that we are now furiously working to unravel.”

This research was supported in part by the National Science Foundation and NASA.
