While the global picture of Open Access remains something of a patchwork (see our recent blog post The Changing Landscape of Open Access Compliance), trends are nevertheless moving in broadly the same direction: over the past decade, global publishing has shifted from 70% closed access to 54% open access.

The White House OSTP’s new memo (aka the Nelson Memo) will see this trend advance rapidly in the United States, stipulating that federally-funded publications and associated datasets should be made publicly available without embargo.

In this blog post, Symplectic’s Kate Byrne and Figshare’s Andrew Mckenna-Foster start to unpack what the Nelson Memo means, along with some of the impacts and challenges that research institutions and librarians will need to consider in the coming months.

Demystifying the Nelson Memo’s recommendations

The focus of the memo is upon ensuring free, immediate, and equitable access to federally funded research.

The first clause of the memo is focused on working with the funders to ensure that they have policies in place to provide embargo-free, public access to research.

The second clause encourages the development of transparent procedures to ensure scientific and research integrity is maintained in public access policies. This is a complex and interesting space, which goes beyond the remit of what we would perhaps traditionally think of as ‘Open Access’ to incorporate elements such as transparency of data, conflicts of interest, funding, and reproducibility (the latter of which is of particular interest to our sister company Ripeta, who are dedicated to building trust in science by benchmarking reproducibility in research).

The third clause recommends that federal agencies coordinate with the OSTP in order to ensure equitable delivery of federally-funded research results and data. While the first clause mentions making supporting data available alongside publications, this clause takes a broader stance toward sharing results.

What does this mean for institutions and faculty?

The Nelson Memo introduces a clear set of challenges for research institutions, research managers, and librarians, who now need to consider how to put in place internal workflows and guidance that will enable faculty to easily identify eligible research and make it openly available, how to support multiple pathways to open access, and how to best engage and incentivize researchers and faculty.

However, the OSTP has made very clear that this is not in fact a mandate, but rather a non-binding set of recommendations. While this certainly relieves some of the potential immediate pressure and panic around getting systems and processes in place, the memo clearly signals the direction of travel that has been communicated to federal funders.

Funders will look at the Nelson Memo when reviewing their own policies, and seek alignment when setting their own policy requirements that drive action for faculty members across the US. So while the memo does not in itself mandate compliance for institutions, universities, and research organizations, it will have a direct impact on the activities faculty are being asked to complete – increasing the need for institutions to offer faculty services and support to help them easily comply with their funders’ requirements.

How have funders responded so far?

We are already seeing clear indications that funders are embracing the recommendations and preparing next steps. Shortly after the announcement, the NIH published a statement of support for the policy, noting that it has “long championed principles of transparency and accessibility in NIH-funded research and supports this important step by the Biden Administration”, and that over the coming months it will “work with interagency partners and stakeholders to revise its current Public Access Policy to enable researchers, clinicians, students, and the public to access NIH research results immediately upon publication”.

Similarly, the USDA tweeted their support for the guidance, noting that “rapid public access to federally-funded research & data can drive data-driven decisions & innovation that are critical in our fast-changing world.”

How big could the impact be?

While it will take some time for funders to begin to publish their updated OA Policies, there have been some early studies which seek to assess how many publications could potentially fall under such policies.

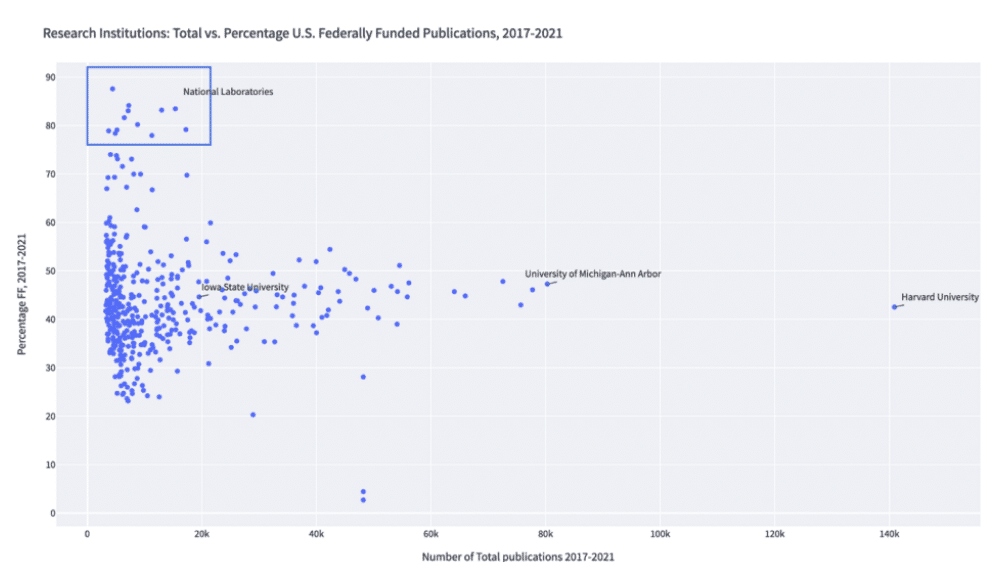

A recent preprint by Eric Schares of Iowa State University [Impact of the 2022 OSTP Memo: A Bibliometric Analysis of U.S. Federally Funded Publications, 2017-2021] used data from Dimensions to identify and analyse publications with federal funding sources. Schares found that:

- 1.32 million publications in the US were federally funded between 2017-2021, representing 33% of all US research outputs in the same period.

- 32% of federally funded publications were not openly available to the public in 2021 (compared to 38% of worldwide publications during the same period).

Schares’ study included 237 federal funding agencies – due to the removal of the $100m threshold, many more funders now fall under the Nelson Memo than under the previous 2013 Holdren memo. This makes it likely that disciplines that were previously not impacted will now find themselves grappling with public access requirements.

In Schares’ visualization here, where each dot represents a research institution, we can see that two main groupings emerge. The first is a smaller group made up of the National Laboratories. They publish a smaller number of papers overall, but are heavily federally funded (80-90% of their works). The second group is a much larger cluster, representing universities across the US. At these organisations, 30-60% of publications are federally funded, but from a much larger base number of publications, meaning that they will likely have a lot of faculty members who will now need support.

Where do faculty members need support?

According to the 2022 State of Open Data Report, institutions and libraries have a particularly essential role to play in meeting new top-down initiatives, not only by providing sufficient infrastructure but also support, training and guidance for researchers. It is clear from the findings of the report that the work of compliance is wearing on researchers, with 35% of respondents citing lack of time as a reason for not adhering to data management plans and 52% citing finding time to curate data as the area they need the most help and support with. 72% of researchers indicated they would rely on an internal resource (either colleagues, the Library or the Research Office) were they to require help with managing or making their data openly available.

How to start?

Institutions who invest now in building capacity in these areas to support open access and data sharing for researchers will be better prepared for the OSTP’s 2025 deadline, helping to avoid any last-minute scramble to support their researchers in meeting this guidance.

Beginning to think about enabling open access can be a daunting task, particularly for institutions who don’t yet have internal workflows or appropriate infrastructure set up, so we recommend breaking down your approach into more manageable chunks:

1. Understand your own Open Access landscape

- Find out where your researchers are publishing and what OA pathways they are currently using. You can do this by reviewing your scholarly publishing patterns and the OA status of those works.

- Explore the data you have for your own repositories – not only your own existing data sets, but also those from other sources such as data aggregators or tools like Dimensions.

- Begin to overlay publishing data with grants data, to benchmark where you are now and work to identify the kinds of drivers that your researchers are likely to see in the future.

2. Review your system capabilities

- Is your repository ready for both publications and data?

- Do you have effective monitoring and reporting capabilities that will help you track engagement and identify areas where your community may need more support?

- Are your systems researcher-friendly? How quickly and easily can a researcher make their work openly available?

3. Consider how you will support your research ecosystem

- Identify how you plan to support and incentivize researchers, considering how you will provide guidance about compliant ways of making work openly available, as well as practical support where relevant.

- Plan communication points between internal stakeholders (e.g. Research Office, Library, IT) to create a joined-up approach that will provide a shared and seamless experience to your researchers.

- Review institutional policies and procedures relating to publishing and open access, considering where you are at present and where you’d like to get to.
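As a rough illustration of the step 1 suggestion to overlay publishing data with grants data, the sketch below joins two small record sets on DOI and reports, per funder, the share of funded publications that are not yet open. All records, field names, and the function name `closed_share_by_funder` are hypothetical and stand in for whatever your own systems export; this is a minimal sketch under those assumptions, not a description of how any particular product does it.

```python
from collections import defaultdict

# Hypothetical sample data: field names are illustrative only and do not
# correspond to a real export schema.
publications = [
    {"doi": "10.1000/a1", "year": 2021, "is_open": True},
    {"doi": "10.1000/a2", "year": 2021, "is_open": False},
    {"doi": "10.1000/a3", "year": 2021, "is_open": False},
    {"doi": "10.1000/a4", "year": 2021, "is_open": True},  # no grant link
]
grants = [
    {"doi": "10.1000/a1", "funder": "NIH"},
    {"doi": "10.1000/a2", "funder": "NIH"},
    {"doi": "10.1000/a2", "funder": "USDA"},  # one paper, two funders
    {"doi": "10.1000/a3", "funder": "USDA"},
]


def closed_share_by_funder(publications, grants):
    """Return, per funder, the fraction of its funded publications that are not open."""
    open_by_doi = {p["doi"]: p["is_open"] for p in publications}

    # Group funded DOIs by funder; a set avoids double-counting repeated links.
    funded = defaultdict(set)
    for g in grants:
        if g["doi"] in open_by_doi:  # ignore grant links to unknown publications
            funded[g["funder"]].add(g["doi"])

    return {
        funder: sum(1 for doi in dois if not open_by_doi[doi]) / len(dois)
        for funder, dois in funded.items()
    }


shares = closed_share_by_funder(publications, grants)
for funder, share in sorted(shares.items()):
    print(f"{funder}: {share:.0%} of funded publications not yet open")
```

A per-funder figure like this gives a simple baseline for prioritising outreach: funders with the largest share of closed outputs are where updated public access policies are likely to generate the most new work for faculty.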

How can Digital Science help?

Symplectic Elements was the first commercially available research information management system to be “open access aware”, connecting to institutional digital repositories in order to enable frictionless open access deposit for publications and accompanying datasets. Since 2009, starting with an initial integration with DSpace and later expanding our repository support to Figshare, EPrints, Hyrax, and custom home-grown systems, we have partnered with and guided many research institutions around the globe as they work to evolve and mature their approach to open access. We have deep experience in building out tools and processes which will help universities meet mandates set by national governments or funders, report on fulfilment and compliance, and engage researchers in increasing levels of deposit.

Our sister company Figshare is a leading provider of cloud repository software and has been working for over a decade to make research outputs, of all types, more discoverable and reusable and lower the barriers of access. Meeting and exceeding many of the ‘desirable characteristics’ set out by the OSTP themselves for repositories, Figshare is the repository of choice for over 100 universities and research institutions looking to ensure their researchers are compliant with the rising tide of funder policies.

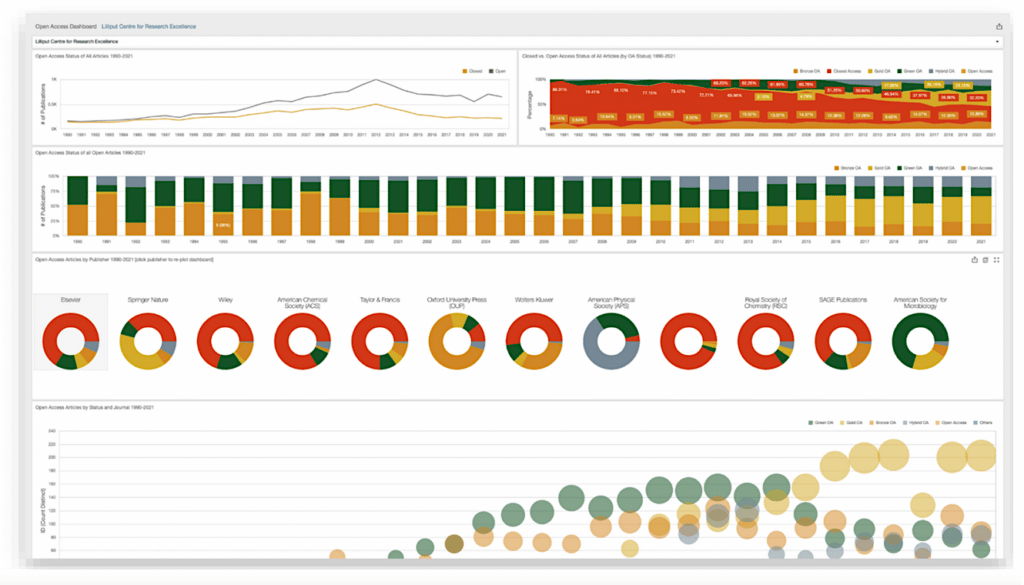

Below is an example of the type of Open Access dashboard that can be configured and run using the various collated and curated scholarly data held within Symplectic Elements.

In this example, we are using Dimensions as a data source, building on data from Unpaywall about the open access status of works within an institution’s Elements system. Using the data visualizations within this dashboard, you can start to look at open access trends over time, such as the different sorts of open access pathways being used, and how that pattern changes when you look across different publishers or different journals, or for different departments within your organization. By gaining this powerful understanding of where you are today, you can begin to think about how to best prioritise your efforts for tomorrow as you continue to mature your approach to open access.
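To make the idea of tracking open access pathways over time more concrete, here is a minimal sketch of the kind of calculation that could sit behind such a chart. It is not the Elements dashboard itself: the records are invented, the function name `pathway_shares_by_year` is hypothetical, and the status labels simply follow the style Unpaywall uses for OA status (for example gold, green, closed).

```python
from collections import Counter, defaultdict

# Hypothetical records: one entry per publication, with an OA status label.
records = [
    {"year": 2020, "oa_status": "closed"},
    {"year": 2020, "oa_status": "closed"},
    {"year": 2020, "oa_status": "gold"},
    {"year": 2020, "oa_status": "green"},
    {"year": 2021, "oa_status": "gold"},
    {"year": 2021, "oa_status": "gold"},
    {"year": 2021, "oa_status": "green"},
    {"year": 2021, "oa_status": "closed"},
]


def pathway_shares_by_year(records):
    """Return {year: {oa_status: share of that year's publications}}."""
    counts = defaultdict(Counter)
    for r in records:
        counts[r["year"]][r["oa_status"]] += 1

    shares = {}
    for year in sorted(counts):
        total = sum(counts[year].values())
        shares[year] = {status: n / total for status, n in counts[year].items()}
    return shares


trend = pathway_shares_by_year(records)
for year, by_status in trend.items():
    summary = ", ".join(f"{s}: {v:.0%}" for s, v in sorted(by_status.items()))
    print(year, summary)
```

The same grouping could be repeated with publisher, journal, or department as the key instead of year, which is the kind of slicing the dashboard description refers to.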

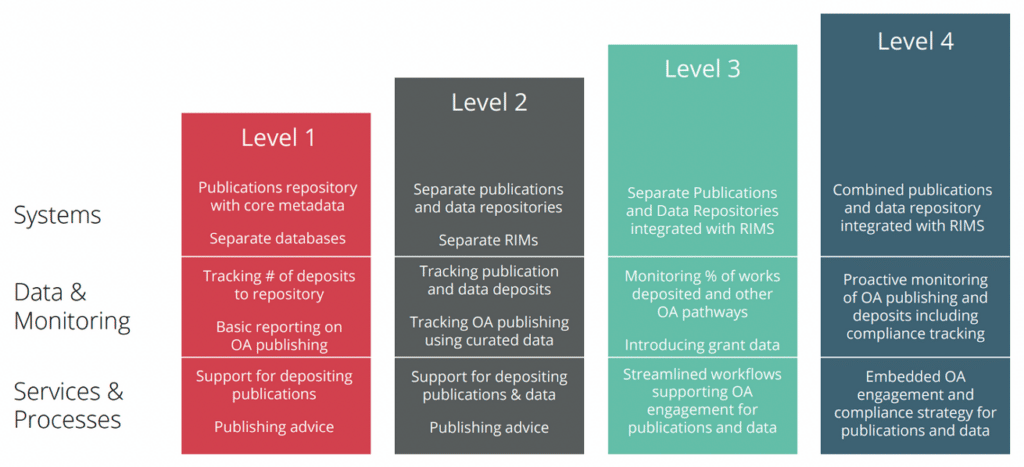

You might find yourself at Level 1 right now where you have a publications repository along with some metadata, and you’re able to track a number of deposits and do some basic reporting, but there are a number of ways that you can build this up over time to create a truly integrated OA solution. By bringing together publications and data repositories and integrating them within a research management solution, you can enter a space where you can monitor proactively, with an embedded engagement and compliance strategy across all publications and data.

For more information or if you’d like to set up time to speak to the Digital Science team about how Symplectic Elements or Figshare for Institutions can support and guide you in your journey to a fully embedded and mature Open Access strategy, please get in touch – we’d love to hear from you.

This blog post was originally published on the Symplectic website.

The post White House OSTP public access recommendations: Maturing your institutional Open Access strategy appeared first on Digital Science.
