Particles swirling around our atmosphere add to climate change, yet much about how they interact with sunlight and influence the seeding of clouds remains puzzling. Studies using jet aircraft, satellites and ground measurements are lifting the lid on how these tiny particles influence something as big as climate.

The leading cause of climate change is rising levels of carbon dioxide in the atmosphere. This rise has been happening since the start of the Industrial Revolution and we now know a lot about how this gas behaves, traps heat and warms the Earth.

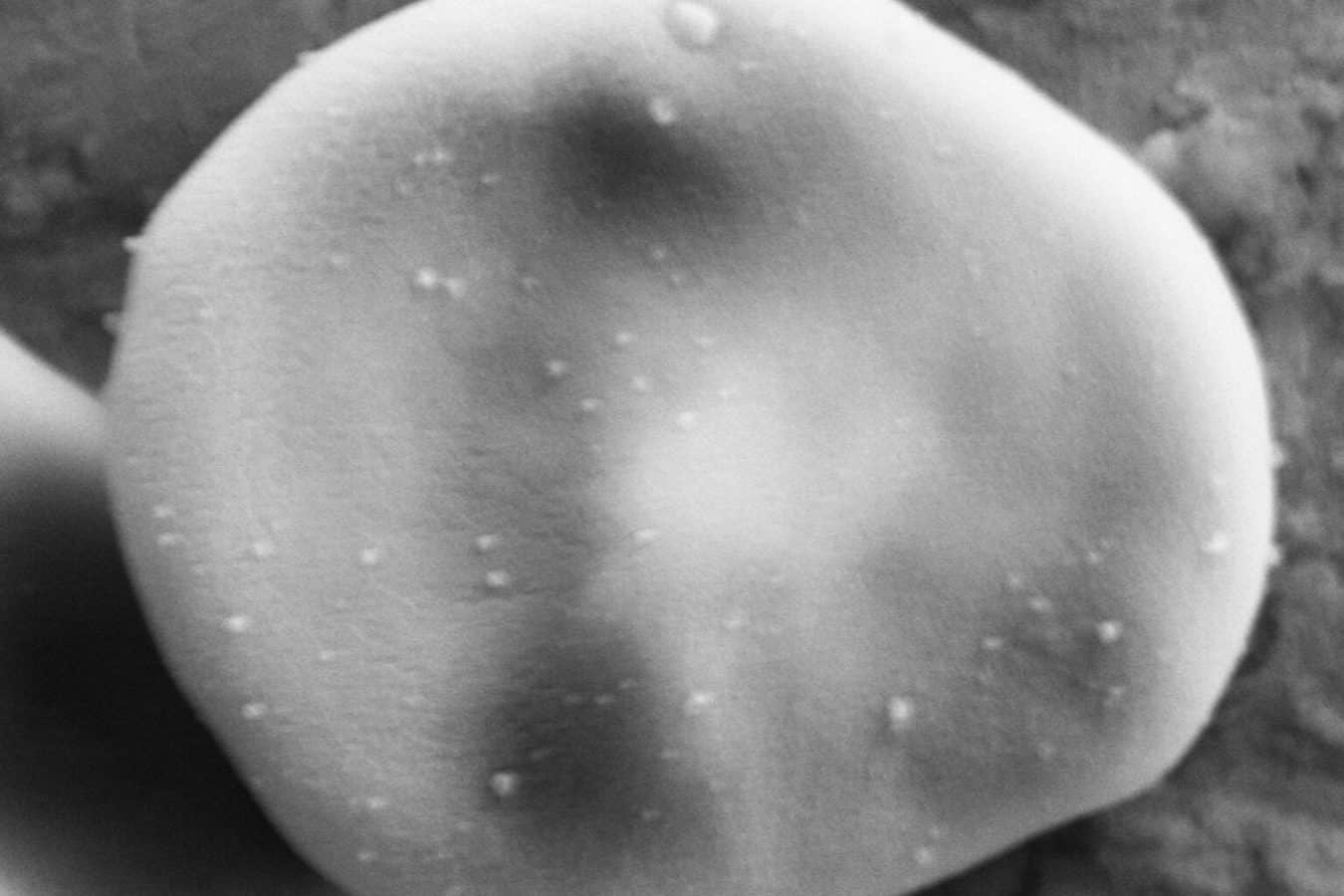

A far more mysterious influence on climate comes from particles – or aerosols – suspended in air. Especially important is black carbon, the soot wafting off from burning vegetation and traffic fumes. This black stuff ranks as the second largest contributor to climate change. But it is very different from carbon dioxide.

‘While carbon dioxide stays in the air for hundreds of years, black carbon lives on for just weeks in the atmosphere,’ explained Professor Bernadett Weinzierl, atmospheric and aerosol scientist at the University of Vienna, Austria.

Carbon dioxide is a gas that mixes so well that its concentrations are pretty much the same over Naples as over Hawaii. On the other hand, the quantity and type of aerosol particles in the atmosphere vary depending on where you look.

The effects of particle types differ too. Black carbon absorbs heat and causes the air to warm. Mineral dust absorbs light, but not as strongly. Some other particles reflect light away from the Earth. Scientists must carry out a bookkeeping exercise, totting up how much some particles warm the Earth and subtracting how much others cool it.

Complicating the picture, particles seed the water droplets that eventually make up clouds. The types of particles influence the properties of those clouds.

Pollutants

In a project called A-LIFE, Prof. Weinzierl tracks man-made pollutants and natural particles, such as desert dust, in the atmosphere.

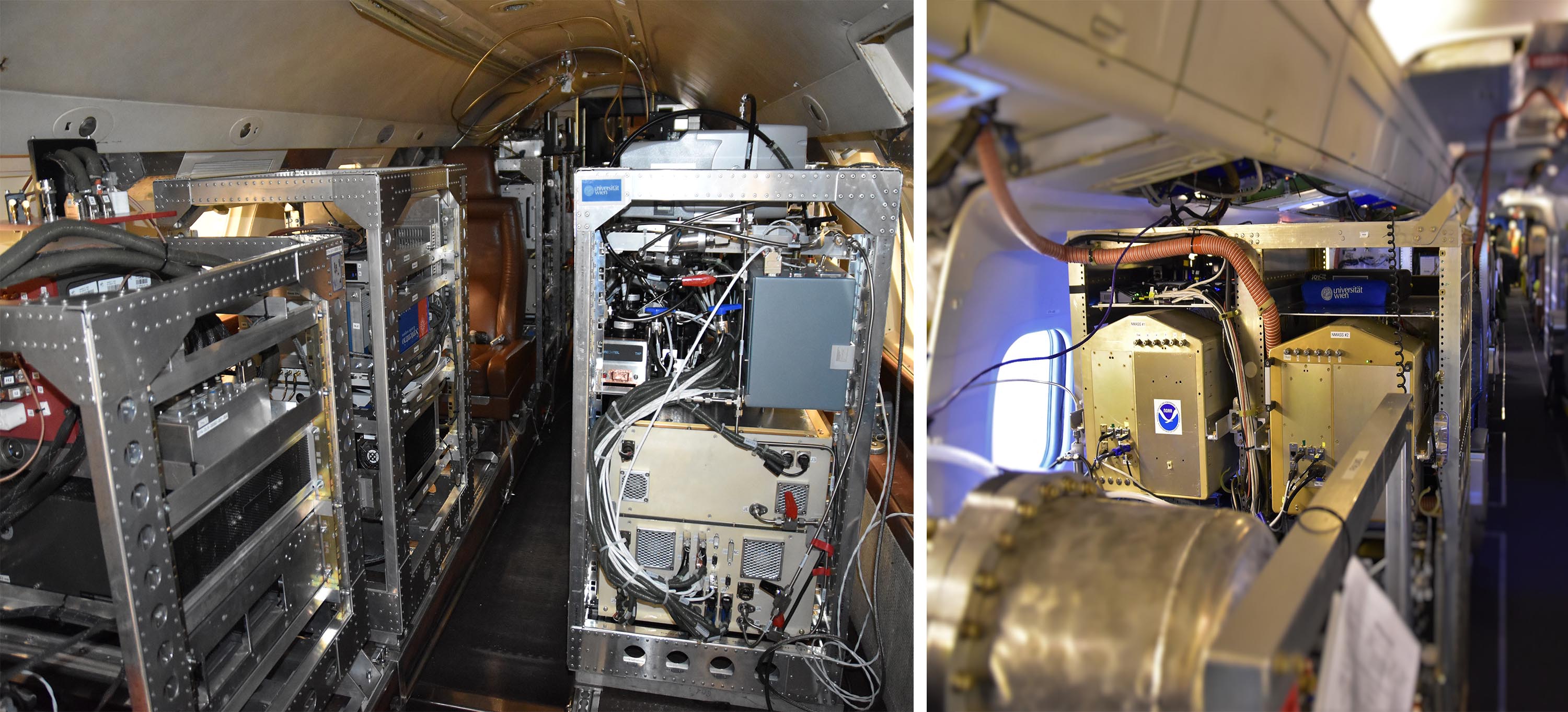

To sample what is floating around in our skies, project scientists flew the German Aerospace Centre (DLR)'s twin-engine Dassault Falcon 20 over the eastern Mediterranean. During 22 flights in 2017, the aircraft took in air to analyse the particles swirling above and around Cyprus.

The eastern Mediterranean is an ideal location because its air contains soot from biomass burning, dust from the Sahara and Arabian deserts, and sulphates and black carbon from traffic and industrial fumes. The aircraft flew as low as 300 metres and as high as 12 km. It also used lasers to track particles in the air.

Prof. Weinzierl observed that when lots of dust was present, even local weather forecasts tended to be less accurate. Similarly, such particles can fog predictions about climate.

The Austrian professor also took part in an experiment with a NASA research aircraft that flew from the North Pole down to the middle of the Pacific Ocean, to the outer rim of Antarctica and back up the Atlantic Ocean. This mission allowed her to compare particle cocktails in pristine skies, far away from human influence, with those in the highly polluted skies over the eastern Mediterranean.

Prof. Weinzierl found more large particles, such as mineral dust, high in the atmosphere than had been predicted. Even in regions of the northern hemisphere very far from any source, particles 10 to 20 microns across were regularly found in the air, she said. For comparison, a human hair measures about 100 microns across, while black carbon particles are less than one micron across.

On the other hand, there was less black carbon high in the atmosphere than the professor expected. ‘We find that models have more black carbon in the upper troposphere than we find in nature,’ she said. ‘There is less warming then, than the models would predict, from black carbon.’

One explanation could be that more black carbon is being washed out of the atmosphere by rain than the models predict.

Clouds

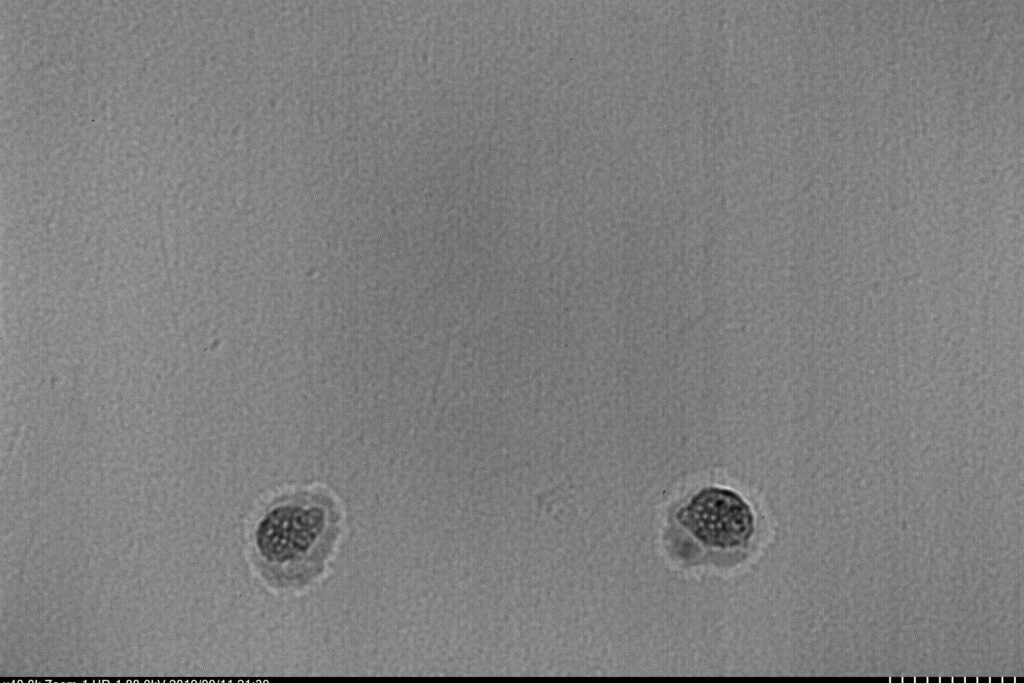

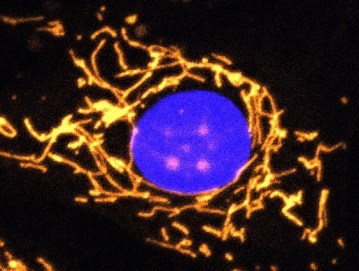

Aerosol particles are critical for cloud formation, and different types affect how the clouds will behave.

‘Every single cloud droplet normally forms on an aerosol particle, because clouds cannot form from pure water under atmospheric conditions,’ said Philip Stier, professor of atmospheric physics at the University of Oxford, in the UK. Cloud droplets can start around molecules emitted by plants, sulphurous compounds spewed from volcanoes or soot from vehicle tailpipes, and more.

But the science of aerosols and cloud formation is perplexing – and aerosol characteristics are important. ‘You need to know about their size, about their composition and how they are mixing together,’ said Prof. Stier. For example, floating sea salt quickly absorbs moisture, whereas pure black carbon tends to repel water.

Clouds themselves will then differ according to how they were seeded. ‘A cloud in a polluted area will generally start from more aerosols and so form more droplets,’ said Prof. Stier. In the end, a cloud formed around tiny man-made particles will usually have more abundant, smaller water droplets.

Clouds built around salt or desert dust generally contain fewer droplets, but the droplets, like the particles they form around, are larger. ‘If the air is very clean, then often cloud droplets start much bigger, and these clouds can rain out very easily,’ added Prof. Stier. ‘But the real question is how aerosols affect precipitation on larger scales.’

Clouds with pollutant aerosols contain more water droplets and appear brighter. Brighter clouds reflect more sunlight, cooling the atmosphere. ‘It could be also that such clouds live longer,’ said Prof. Stier, ‘but these effects remain uncertain.’
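Why more droplets tends to mean smaller droplets can be sketched with some simple arithmetic. The short Python example below is only an illustration under strong assumptions that do not come from the researchers: every droplet in a cloud is the same size, and a clean and a polluted cloud hold the same amount of liquid water. The water content and droplet counts are made-up round numbers, not measurements.

```python
import math

WATER_DENSITY = 1000.0  # kilograms per cubic metre


def droplet_radius_m(liquid_water_kg_m3, droplets_per_m3):
    """Radius (metres) of equal-sized droplets sharing a fixed amount of water."""
    # Volume of water available to each droplet, in cubic metres.
    volume_per_droplet = liquid_water_kg_m3 / (WATER_DENSITY * droplets_per_m3)
    # Invert the sphere volume formula V = (4/3) * pi * r**3.
    return (3.0 * volume_per_droplet / (4.0 * math.pi)) ** (1.0 / 3.0)


# Illustrative assumptions only, not values from A-LIFE, ACCLAIM or RECAP.
LIQUID_WATER = 0.3e-3       # 0.3 grams of water per cubic metre of air
CLEAN_DROPLETS = 50e6       # 50 droplets per cubic centimetre
POLLUTED_DROPLETS = 500e6   # 500 droplets per cubic centimetre

r_clean = droplet_radius_m(LIQUID_WATER, CLEAN_DROPLETS)
r_polluted = droplet_radius_m(LIQUID_WATER, POLLUTED_DROPLETS)

print(f"clean cloud droplet radius:    {r_clean * 1e6:.1f} microns")
print(f"polluted cloud droplet radius: {r_polluted * 1e6:.1f} microns")
print(f"size ratio (clean / polluted): {r_clean / r_polluted:.2f}")
```

With the water held fixed, the droplet radius shrinks with the cube root of the droplet count, so ten times as many droplets are each roughly half the size. Real clouds contain a range of droplet sizes and do not keep their water content fixed, which is one reason the effects Prof. Stier describes remain uncertain.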

Aerosol particles and clouds introduce uncertainties into climate predictions. Their complexity, and the difficulty of the calculations involved, mean that scientists still struggle to understand clouds at both microscopic and large scales. But progress is being made.

Prof. Stier studied how aerosols influence convective clouds as part of the ACCLAIM project. Convective clouds form when warm air rises and include the fluffy cumulus clouds you might see on a summer day. They are poorly represented in climate models, says Prof. Stier, but new stationary satellites are helping to better track them.

Heat

Prof. Stier is now investigating how aerosol particles in our skies influence rainfall in a project called RECAP. He studies the energy balance of the atmosphere on small scales and across expansive cloud fields. For example, when it rains, latent heat is released into the atmosphere.

The energy balance of the atmosphere varies. ‘In the tropics, we actually get a local enhancement of precipitation (caused by absorbing aerosols, like black carbon),’ Prof. Stier explained, ‘but at mid-latitudes, where the rotation of the Earth exerts a stronger effect and it is not so easy to divert energy away, we get a very strong decrease in precipitation.’

Prof. Stier is using artificial intelligence to crunch and make sense of the masses of data being collected on the movement of particles and their effects on clouds and rainfall.

Meanwhile, Prof. Weinzierl’s group continues to analyse the A-LIFE data. The Austrian group developed new methods, including a smart cloud algorithm for the ATom flights. ‘It looks at the data and then says whether you are inside or outside a cloud,’ Prof. Weinzierl said, and also ‘about the type of cloud.’

Discoveries have piled up. Prof. Weinzierl confirmed that the lifetime of black carbon in the high atmosphere is shorter than had been assumed in climate studies.

Her group also contributed to the discovery that a natural sulphur compound is important for starting cloud formation in the marine atmosphere. They also helped reveal that newly formed particles high in the atmosphere over the tropics help to seed clouds as they coagulate and descend.

Understanding how particles influence clouds, and ultimately climate, has been a huge hurdle for scientists. But it is a hurdle they are overcoming through better measurements of particles and a better understanding of how those particles interact with and affect clouds and climate.

The research in this article was funded by the EU’s European Research Council.

Published by Horizon

from ScienceBlog.com https://ift.tt/3lnAZMP